Launching threads is easy; making them work cooperatively is not. The hard part of designing a multithreaded program is figuring out where concurrently running threads might clash and using thread synchronization logic to prevent clashes from occurring. You provide the logic; the .NET Framework provides the synchronization primitives.

Here’s a list of the thread synchronization classes featured in the FCL. All are members of the System.Threading namespace:

| Class | Description |
| --- | --- |
| AutoResetEvent | Blocks a thread until another thread sets the event |
| Interlocked | Enables simple operations such as incrementing and decrementing integers to be performed in a thread-safe manner |
| ManualResetEvent | Blocks one or more threads until another thread sets the event |
| Monitor | Prevents more than one thread at a time from accessing a resource |
| Mutex | Prevents more than one thread at a time from accessing a resource and has the ability to span application and process boundaries |
| ReaderWriterLock | Enables multiple threads to read a resource simultaneously but prevents overlapping reads and writes as well as overlapping writes |

Each of these synchronization classes will be described in due time. But first, here’s an example demonstrating why thread synchronization is so important.

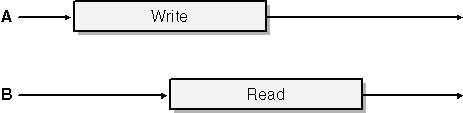

Suppose you write an application that launches a background thread for the purpose of gathering data from a data source—perhaps an online connection to another server or a physical device on the host system. As data arrives, the background thread writes it to a linked list. Furthermore, suppose that other threads in the application read the linked list and process the data contained therein. Figure 14-4 illustrates what might happen if the threads that read and write the linked list aren’t synchronized. Most of the time, you get lucky: reads and writes don’t overlap and the code works fine. But if by chance a read and write occur at the same time, it’s entirely possible that the reader thread will catch the linked list in an inconsistent state as it’s being updated by the writer thread. The results are unpredictable. The reader thread might read invalid data, it might throw an exception, or it might suffer no ill effects whatsoever. The point is you don’t know, and software should never be left to chance.

Figure 14-4


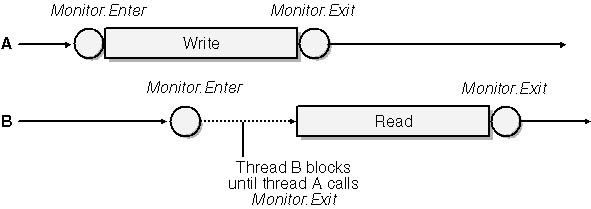

Figure 14-5 illustrates how a Monitor object can solve the problem by synchronizing access to the linked list. Each thread checks with a Monitor before accessing the linked list. The Monitor serializes access to the linked list, turning what would have been an overlapping read and write into a synchronized read and write. The linked list is now protected, and neither thread has to worry about interfering with the other.

Figure 14-5


The simplest way to synchronize threads is to use the System.Threading.Interlocked class. Interlocked has four static methods that you can use to perform simple operations on 32-bit and 64-bit values and do so in a thread-safe manner:

| Method | Purpose |
| --- | --- |
| Increment | Increments a 32-bit or 64-bit value |
| Decrement | Decrements a 32-bit or 64-bit value |
| Exchange | Exchanges two 32-bit or 64-bit values |
| CompareExchange | Compares two 32-bit or 64-bit values and replaces one with a third if the two are equal |

The following example increments a 32-bit integer named count in a thread-safe manner:

Interlocked.Increment (ref count);

The next example decrements the same integer:

Interlocked.Decrement (ref count);

Routing all accesses to a given variable through the Interlocked class ensures that two threads can’t touch the variable at the same time, even if the threads are running on different CPUs.
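To see that guarantee in action, here is a minimal sketch rather than a definitive implementation. The class name InterlockedDemo, the Run helper, and the fields count, initialized, and initRuns are hypothetical names invented for the illustration. Four threads each increment a shared counter 100,000 times through Interlocked.Increment, and Interlocked.CompareExchange is used so that only one of them performs a one-time initialization step.

```csharp
using System;
using System.Threading;

class InterlockedDemo
{
    const int ThreadCount = 4;
    const int Iterations = 100000;

    static int count;        // shared counter updated by every worker
    static int initialized;  // 0 = not yet initialized, 1 = initialized
    static int initRuns;     // how many threads performed the one-time step

    static void Worker ()
    {
        // CompareExchange returns the value the variable held before the
        // call, so only the thread that saw 0 (and swapped in 1) gets here
        if (Interlocked.CompareExchange (ref initialized, 1, 0) == 0)
            Interlocked.Increment (ref initRuns);

        for (int i=0; i<Iterations; i++)
            Interlocked.Increment (ref count);
    }

    // Runs the workers to completion and returns the final counter value
    public static int Run ()
    {
        count = 0;
        initialized = 0;
        initRuns = 0;

        Thread[] threads = new Thread[ThreadCount];
        for (int i=0; i<ThreadCount; i++) {
            threads[i] = new Thread (new ThreadStart (Worker));
            threads[i].Start ();
        }
        for (int i=0; i<ThreadCount; i++)
            threads[i].Join ();

        return count;
    }

    static void Main ()
    {
        int total = Run ();
        Console.WriteLine ("count = {0}, one-time step ran {1} time(s)",
            total, initRuns);
    }
}
```

The program prints a count of 400000 and reports that the one-time step ran once. Replace the Interlocked.Increment call in the loop with count++ and the total will usually come up short, because unsynchronized increments from different threads overwrite one another.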

Monitors in the .NET Framework are similar to critical sections in Windows. They synchronize concurrent thread accesses so that an object, a linked list, or some other resource can be manipulated only by one thread at a time. In other words, they support mutually exclusive access to a guarded resource. Monitors are represented by the FCL’s Monitor class.

Monitor’s two most important methods are Enter and Exit. The former claims a lock on the resource that the monitor guards and is called prior to accessing the resource. If the lock is currently owned by another thread, the thread that calls Enter blocks—that is, is taken off the processor and placed in a very efficient wait state—until the lock comes free. Exit frees the lock after the access is complete so that other threads can access the resource.

As an aid in understanding how monitors are used and why they exist, consider the code in Figure 14-6. Called BadNews, it’s a multithreaded console application. At startup, it initializes a buffer of bytes with the values 1 through 100. Then it launches a “writer” thread that for 10 seconds randomly swaps values in the buffer, and 10 “reader” threads that sum up all the values in the buffer. Because the sum of all the numbers from 1 to 100 is 5050, the reader threads should always come up with that sum. That’s the theory, anyway. The problem is that the reader and writer threads aren’t synchronized. Given enough iterations, it’s highly likely that a reader thread will catch the buffer in an inconsistent state and arrive at the wrong sum. In this sample program, a reader thread writes an error message to the console window if it computes a sum other than 5050—proof positive that a synchronization error has occurred.

using System;
using System.Threading;

class MyApp
{
    static Random rng = new Random ();
    static byte[] buffer = new byte[100];
    static Thread writer;

    static void Main ()
    {
        // Initialize the buffer
        for (int i=0; i<100; i++)
            buffer[i] = (byte) (i + 1);

        // Start one writer thread
        writer = new Thread (new ThreadStart (WriterFunc));
        writer.Start ();

        // Start 10 reader threads
        Thread[] readers = new Thread[10];

        for (int i=0; i<10; i++) {
            readers[i] = new Thread (new ThreadStart (ReaderFunc));
            readers[i].Name = (i + 1).ToString ();
            readers[i].Start ();
        }
    }

    static void ReaderFunc ()
    {
        // Loop until the writer thread ends
        for (int i=0; writer.IsAlive; i++) {
            int sum = 0;

            // Sum the values in the buffer
            for (int k=0; k<100; k++)
                sum += buffer[k];

            // Report an error if the sum is incorrect
            if (sum != 5050) {
                string message = String.Format ("Thread {0} " +
                    "reports a corrupted read on iteration {1}",
                    Thread.CurrentThread.Name, i + 1);
                Console.WriteLine (message);
                writer.Abort ();
                return;
            }
        }
    }

    static void WriterFunc ()
    {
        DateTime start = DateTime.Now;

        // Loop for up to 10 seconds
        while ((DateTime.Now - start).Seconds < 10) {
            int j = rng.Next (0, 100);
            int k = rng.Next (0, 100);
            Swap (ref buffer[j], ref buffer[k]);
        }
    }

    static void Swap (ref byte a, ref byte b)
    {
        byte tmp = a;
        a = b;
        b = tmp;
    }
}

Give BadNews a try by running it a few times. The following command compiles the source code file into an EXE:

csc badnews.cs

And this command runs the resulting EXE:

badnews

In all likelihood, one or more reader threads will report an error, as shown in Figure 14-7. Once a reader thread encounters an error, it aborts the writer thread, causing all the other reader threads to shut down, too. Run the program 10 times and you’ll get 10 different sets of results, graphically illustrating the unpredictability and hard-to-reproduce nature of thread synchronization errors.

Figure 14-7


Figure 14-8 contains a corrected version of BadNews.cs. Monitor.cs uses monitors to prevent reader and writer threads from accessing the buffer at the same time. It runs for the full 10 seconds without reporting any errors. The only changes are the Monitor.Enter and Monitor.Exit calls that bracket each access to the buffer. Before accessing the buffer, Monitor.cs threads acquire a lock by calling Monitor.Enter:

Monitor.Enter (buffer);

After reading from or writing to the buffer, each thread releases the lock it acquired by calling Monitor.Exit:

Monitor.Exit (buffer);

Calls to Exit are enclosed in finally blocks to ensure that they’re executed even in the face of inopportune exceptions. Always use finally blocks to exit monitors or else you run the risk of orphaning a lock and causing other threads to hang indefinitely.

using System;
using System.Threading;

class MyApp
{
    static Random rng = new Random ();
    static byte[] buffer = new byte[100];
    static Thread writer;

    static void Main ()
    {
        // Initialize the buffer
        for (int i=0; i<100; i++)
            buffer[i] = (byte) (i + 1);

        // Start one writer thread
        writer = new Thread (new ThreadStart (WriterFunc));
        writer.Start ();

        // Start 10 reader threads
        Thread[] readers = new Thread[10];

        for (int i=0; i<10; i++) {
            readers[i] = new Thread (new ThreadStart (ReaderFunc));
            readers[i].Name = (i + 1).ToString ();
            readers[i].Start ();
        }
    }

    static void ReaderFunc ()
    {
        // Loop until the writer thread ends
        for (int i=0; writer.IsAlive; i++) {
            int sum = 0;

            // Sum the values in the buffer
            Monitor.Enter (buffer);
            try {
                for (int k=0; k<100; k++)
                    sum += buffer[k];
            }
            finally {
                Monitor.Exit (buffer);
            }

            // Report an error if the sum is incorrect
            if (sum != 5050) {
                string message = String.Format ("Thread {0} " +
                    "reports a corrupted read on iteration {1}",
                    Thread.CurrentThread.Name, i + 1);
                Console.WriteLine (message);
                writer.Abort ();
                return;
            }
        }
    }

    static void WriterFunc ()
    {
        DateTime start = DateTime.Now;

        // Loop for up to 10 seconds
        while ((DateTime.Now - start).Seconds < 10) {
            int j = rng.Next (0, 100);
            int k = rng.Next (0, 100);

            Monitor.Enter (buffer);
            try {
                Swap (ref buffer[j], ref buffer[k]);
            }
            finally {
                Monitor.Exit (buffer);
            }
        }
    }

    static void Swap (ref byte a, ref byte b)
    {
        byte tmp = a;
        a = b;
        b = tmp;
    }
}

The previous section shows one way to use monitors, but there’s another way, too: C#’s lock keyword (in Visual Basic .NET, SyncLock). In C#, the statements

lock (buffer) {
    ...
}

are functionally equivalent to

Monitor.Enter (buffer);
try {
    ...
}
finally {
    Monitor.Exit (buffer);
}

The CIL generated by these two sets of statements is nearly identical. Figure 14-9 shows the code in Figure 14-8 rewritten to use lock. The lock keyword makes the code more concise and also ensures the presence of a finally block to make sure the lock is released. You don’t see the finally block, but it’s there. Check the CIL if you want to see for yourself.

using System;
using System.Threading;

class MyApp
{
    static Random rng = new Random ();
    static byte[] buffer = new byte[100];
    static Thread writer;

    static void Main ()
    {
        // Initialize the buffer
        for (int i=0; i<100; i++)
            buffer[i] = (byte) (i + 1);

        // Start one writer thread
        writer = new Thread (new ThreadStart (WriterFunc));
        writer.Start ();

        // Start 10 reader threads
        Thread[] readers = new Thread[10];

        for (int i=0; i<10; i++) {
            readers[i] = new Thread (new ThreadStart (ReaderFunc));
            readers[i].Name = (i + 1).ToString ();
            readers[i].Start ();
        }
    }

    static void ReaderFunc ()
    {
        // Loop until the writer thread ends
        for (int i=0; writer.IsAlive; i++) {
            int sum = 0;

            // Sum the values in the buffer
            lock (buffer) {
                for (int k=0; k<100; k++)
                    sum += buffer[k];
            }

            // Report an error if the sum is incorrect
            if (sum != 5050) {
                string message = String.Format ("Thread {0} " +
                    "reports a corrupted read on iteration {1}",
                    Thread.CurrentThread.Name, i + 1);
                Console.WriteLine (message);
                writer.Abort ();
                return;
            }
        }
    }

    static void WriterFunc ()
    {
        DateTime start = DateTime.Now;

        // Loop for up to 10 seconds
        while ((DateTime.Now - start).Seconds < 10) {
            int j = rng.Next (0, 100);
            int k = rng.Next (0, 100);

            lock (buffer) {
                Swap (ref buffer[j], ref buffer[k]);
            }
        }
    }

    static void Swap (ref byte a, ref byte b)
    {
        byte tmp = a;
        a = b;
        b = tmp;
    }
}

Monitor.Enter always blocks if the lock is owned by another thread; there’s no way to tell it not to. That’s why Monitor includes a separate method named TryEnter. TryEnter returns immediately, regardless of whether the lock is available. A return value equal to true means the caller acquired the lock and can safely access the resource guarded by the monitor. False means the lock is currently owned by another thread:

if (Monitor.TryEnter (buffer)) {
    // TODO: Acquired the lock; access the buffer
}
else {
    // TODO: Couldn't acquire the lock; try again later
}

The fact that TryEnter, unlike Enter, returns if the lock isn’t free affords the caller the opportunity to attend to other matters rather than sit idle, waiting for a lock to come free.

TryEnter comes in a version that accepts a time-out value and waits for up to the specified number of milliseconds to acquire the lock:

if (Monitor.TryEnter (buffer, 2000)) {
    // TODO: Acquired the lock; access the buffer
}
else {
    // TODO: Waited 2 seconds but couldn't acquire the lock;
    // try again later
}

TryEnter also accepts a TimeSpan value in lieu of a number of milliseconds.
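One detail the TryEnter fragments leave out is the release: a lock acquired with TryEnter still has to be freed with Monitor.Exit, and only when TryEnter returned true. The following is a rough sketch of how that might look with the TimeSpan overload. TryEnterDemo, TrySum, CreateBuffer, Holder, and the two ManualResetEvent fields are hypothetical names made up for this illustration; the events exist only to make the demo deterministic by keeping the lock busy during the first attempt.

```csharp
using System;
using System.Threading;

class TryEnterDemo
{
    static byte[] buffer = CreateBuffer ();
    static ManualResetEvent lockTaken = new ManualResetEvent (false);
    static ManualResetEvent release = new ManualResetEvent (false);

    static byte[] CreateBuffer ()
    {
        byte[] b = new byte[100];
        for (int i=0; i<b.Length; i++)
            b[i] = (byte) (i + 1);
        return b;
    }

    // Sums the buffer if the lock can be acquired within the time-out.
    // Returns false (and leaves sum at 0) if the lock stayed busy.
    public static bool TrySum (TimeSpan timeout, out int sum)
    {
        sum = 0;
        if (!Monitor.TryEnter (buffer, timeout))
            return false;   // Nothing was acquired, so nothing to release

        try {
            for (int k=0; k<buffer.Length; k++)
                sum += buffer[k];
            return true;
        }
        finally {
            Monitor.Exit (buffer);
        }
    }

    // Holds the lock until told to let go
    static void Holder ()
    {
        lock (buffer) {
            lockTaken.Set ();
            release.WaitOne ();
        }
    }

    static void Main ()
    {
        Thread holder = new Thread (new ThreadStart (Holder));
        holder.Start ();
        lockTaken.WaitOne ();   // The holder thread now owns the lock

        int sum;
        bool acquired = TrySum (TimeSpan.FromMilliseconds (200), out sum);
        Console.WriteLine ("While busy: acquired = {0}", acquired);

        release.Set ();
        holder.Join ();

        acquired = TrySum (TimeSpan.FromMilliseconds (200), out sum);
        Console.WriteLine ("After release: acquired = {0}, sum = {1}",
            acquired, sum);
    }
}
```

The first attempt gives up after 200 milliseconds and reports False; the second succeeds and reports a sum of 5050. Putting the Exit call in a finally block that is entered only after a successful TryEnter avoids calling Exit on a lock the thread never owned.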

Monitor includes static methods named Wait, Pulse, and PulseAll that are functionally equivalent to Java’s Object.wait, Object.notify, and Object.notifyAll methods. Wait temporarily relinquishes the lock held by the calling thread and blocks until the lock is reacquired. The Pulse and PulseAll methods notify threads blocking in Wait that a thread has updated the object guarded by the lock. Pulse queues up the next waiting thread and allows it to run when the thread that’s currently executing releases the lock. PulseAll gives all waiting threads the opportunity to run.

To picture how waiting and pulsing work, consider the following thread methods. The first one places items in a queue at half-second intervals and is executed by thread A:

static void WriterFunc ()
{
    string[] strings = new string[] { "One", "Two", "Three" };

    lock (queue) {
        foreach (string item in strings) {
            queue.Enqueue (item);
            Monitor.Pulse (queue);
            Monitor.Wait (queue);
            Thread.Sleep (500);
        }
    }
}

The second method reads items from the queue as they come available and is executed by thread B:

static void ReaderFunc ()
{
    lock (queue) {
        while (true) {
            if (queue.Count > 0) {
                while (queue.Count > 0) {
                    string item = (string) queue.Dequeue ();
                    Console.WriteLine (item);
                }
                Monitor.Pulse (queue);
            }
            Monitor.Wait (queue);
        }
    }
}

On the surface, it appears as if only one of these methods could execute at a time. After all, both attempt to acquire a lock on the same object. Nevertheless, the methods execute concurrently. Here’s how.

Imagine that ReaderFunc (thread B) acquires the lock first. It finds that the queue contains no items and calls Monitor.Wait. WriterFunc, which executes on thread A, is currently blocking in the call to Monitor.Enter generated from the lock statement. It comes unblocked, adds an item to the queue, and calls Monitor.Pulse. That readies thread B for execution. Thread A then calls Wait itself, allowing thread B to awake from its call to Monitor.Wait and retrieve the item that thread A placed in the queue. Afterward, thread B calls Monitor.Pulse and Monitor.Wait and the whole process starts over again.
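If you want to experiment with waiting and pulsing in a program that runs to completion, the sketch below is one possible variation, not the only way to structure it. It assumes a non-generic System.Collections.Queue, and it adds two things that are my own inventions for the illustration: a done flag so that the reader knows when to stop, and a received list that records what the reader saw. The class name WaitPulseDemo and the Run helper are likewise hypothetical. The reader rechecks its condition in a loop each time Wait returns, so a pulse that arrives before the reader starts waiting is harmless.

```csharp
using System;
using System.Collections;
using System.Threading;

class WaitPulseDemo
{
    static Queue queue = new Queue ();          // Guarded by lock (queue)
    static bool done;                           // Guarded by lock (queue)
    static ArrayList received = new ArrayList ();   // Written only by the reader

    static void WriterFunc ()
    {
        string[] strings = new string[] { "One", "Two", "Three" };

        foreach (string item in strings) {
            lock (queue) {
                queue.Enqueue (item);
                Monitor.Pulse (queue);   // Wake the reader if it's waiting
            }
            Thread.Sleep (100);          // Sleep without holding the lock
        }

        lock (queue) {
            done = true;                 // Tell the reader no more items are coming
            Monitor.Pulse (queue);
        }
    }

    static void ReaderFunc ()
    {
        lock (queue) {
            while (true) {
                // Wait releases the lock while blocked and reacquires it
                // before returning; recheck the condition after each wake-up
                while (queue.Count == 0 && !done)
                    Monitor.Wait (queue);

                if (queue.Count == 0)
                    return;              // Writer is finished and queue is drained

                while (queue.Count > 0)
                    received.Add (queue.Dequeue ());
            }
        }
    }

    // Runs both threads to completion; returns the items in arrival order
    public static string Run ()
    {
        queue.Clear ();
        received.Clear ();
        done = false;

        Thread reader = new Thread (new ThreadStart (ReaderFunc));
        Thread writer = new Thread (new ThreadStart (WriterFunc));
        reader.Start ();
        writer.Start ();
        writer.Join ();
        reader.Join ();

        return String.Join (",", (string[]) received.ToArray (typeof (string)));
    }

    static void Main ()
    {
        Console.WriteLine (Run ());
    }
}
```

The program prints One,Two,Three. Unlike the two methods above, this version has the writer release the lock before it sleeps, so the reader isn't held up while the writer is idle.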

Personally, I don’t find this architecture very exciting. There are other ways to synchronize threads on queues (AutoResetEvents, for example) that are easier to write and maintain and that don’t rely on nested locks. Wait, Pulse, and PulseAll might be very useful, however, for porting Java code to the .NET Framework.

Curious to know how Monitor objects work? Here’s a short synopsis—and one big reason why you should care.

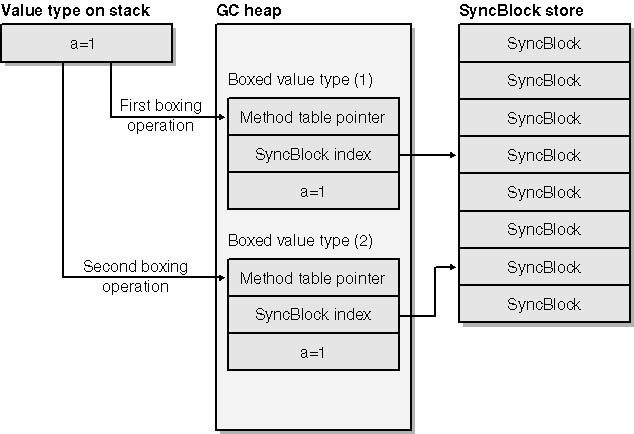

Monitor’s Enter and Exit methods accept a reference to an Object or an Object-derivative—in other words, the address of a reference type allocated on the garbage-collected (GC) heap. Every object on the GC heap has two overhead members associated with it:

- A method table pointer containing the address of the object’s method table
- A SyncBlock index referencing a SyncBlock created by the .NET Framework

The method table pointer serves the same purpose as a virtual function table, or “vtable,” in C++. A SyncBlock is the moral equivalent of a Windows mutex or critical section and is the physical data structure that makes up the lock manipulated by Monitor.Enter and Monitor.Exit. SyncBlocks aren’t created unless needed to avoid undue overhead on the system.

When you call Monitor.Enter, the framework checks the SyncBlock of the object identified in the method call. If the SyncBlock indicates that another thread owns the lock, the framework blocks the calling thread until the lock becomes available. Monitor.Exit frees the lock by updating the object’s SyncBlock. The relationship between objects allocated on the GC heap and SyncBlocks is diagrammed in Figure 14-10.

Figure 14-10


Why is understanding how monitors work important? Because that knowledge can prevent you from committing a grievous error that is all too easy to make. Before I say more, can you spot the bug in the following code?

int a = 1;
  .
  .
  .
Monitor.Enter (a);
try {
    a *= 3;
}
finally {
    Monitor.Exit (a);
}

Check the CIL that the C# compiler generates from this code and you’ll find that it contains two BOX instructions. Monitor.Enter and Monitor.Exit operate on reference types. Since variable a is a value type, it can’t be passed directly to Enter and Exit; it must be boxed first. The C# compiler obligingly emits two BOX instructions—one to box the value type passed to Enter, and another to box the value type passed to Exit. The two boxing operations create two different objects on the heap, each containing the same value but each with its own SyncBlock index pointing to a different SyncBlock (Figure 14-11). See the problem? The code compiles just fine, but it throws an exception at run time because it calls Exit on a lock that hasn’t been acquired. Be thankful for the exception, because otherwise the code might seem to work when in fact it provides no synchronization at all.
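You can reproduce the failure in a few lines. The sketch below makes the two boxing operations explicit so that the lock taken on the first box can still be released cleanly; the variables first and second stand in for the boxes that the compiler would create implicitly, and the names BoxingBugDemo and ExitThrows are hypothetical, chosen just for this illustration.

```csharp
using System;
using System.Threading;

class BoxingBugDemo
{
    // Returns true if exiting on the second box throws, as described
    public static bool ExitThrows ()
    {
        int a = 1;
        object first = a;    // The box that Monitor.Enter (a) would create
        object second = a;   // The box that Monitor.Exit (a) would create

        Monitor.Enter (first);
        try {
            // A different object: this thread never locked it
            Monitor.Exit (second);
            return false;
        }
        catch (SynchronizationLockException) {
            return true;
        }
        finally {
            // Release the lock that really was acquired
            Monitor.Exit (first);
        }
    }

    static void Main ()
    {
        Console.WriteLine ("Exit on the second box threw: {0}", ExitThrows ());
    }
}
```

The program prints True: the exception is a SynchronizationLockException, raised because the calling thread doesn't own a lock on the object passed to Exit.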

Figure 14-11


Is there a solution? You bet. Manually box the value type and pass the resultant object reference to both Enter and Exit:

int a = 1;
object o = a;
  .
  .
  .
Monitor.Enter (o);
try {
    a *= 3;
}
finally {
    Monitor.Exit (o);
}

If you do this in multiple threads (and you will; otherwise you wouldn’t be using a monitor in the first place), be sure to box the value type one time and use the resulting reference in calls to Enter and Exit in all threads. If the value type you’re synchronizing access to is a field, declare a corresponding Object field and store the boxed reference there. Then use that field to acquire the reference passed to Enter and Exit. (Technically, the object reference you pass to Enter and Exit doesn’t have to be a boxed version of the value type you’re guarding. All that matters is that you pass the same reference to both methods.)
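Here is a rough sketch of the field-based approach, under the assumption that the guarded value is a static int field. Because the reference passed to the monitor doesn't have to be a boxed copy of the value, the sketch simply allocates a plain Object to lock on. The names Counter, sync, Worker, and Run are hypothetical, and the arithmetic is changed to an addition so that the result stays small enough to check by hand.

```csharp
using System;
using System.Threading;

class Counter
{
    static int a = 1;                             // The value type being guarded
    static readonly object sync = new object ();  // One reference shared by all threads

    static void Worker ()
    {
        for (int i=0; i<1000; i++) {
            lock (sync) {     // Every thread locks the same object
                a += 3;
            }
        }
    }

    // Runs four workers to completion and returns the final value of a
    public static int Run ()
    {
        a = 1;

        Thread[] threads = new Thread[4];
        for (int i=0; i<threads.Length; i++) {
            threads[i] = new Thread (new ThreadStart (Worker));
            threads[i].Start ();
        }
        for (int i=0; i<threads.Length; i++)
            threads[i].Join ();

        return a;
    }

    static void Main ()
    {
        Console.WriteLine (Run ());
    }
}
```

Four threads each add 3 to the field 1,000 times, so the program prints 12001. Marking the field readonly is a small safeguard: nobody can accidentally point it at a different object while threads are using it as a lock.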

The fact that the C# compiler automatically boxes value types passed to Monitor.Enter and Monitor.Exit is another reason that using C#’s lock keyword is superior to calling Monitor.Enter and Monitor.Exit directly. The following code won’t compile because the C# compiler knows that a is a value type and that boxing the value type won’t yield the desired result:

lock (a) {
    a *= 3;
}

However, if o is an Object representing a boxed version of a, the code compiles just fine:

lock (o) {
    a *= 3;
}

An ounce of prevention is worth a pound of cure. Be aware that value types require special handling when used with monitors and you’ll avoid one of the .NET Framework’s nastiest traps.

Reader/writer locks are similar to monitors in that they prevent concurrent threads from accessing a resource simultaneously. The difference is that reader/writer locks are a little smarter: they permit multiple threads to read concurrently, but they prevent overlapping reads and writes as well as overlapping writes. For situations in which reader threads outnumber writer threads, reader/writer locks frequently offer better performance than monitors. No harm can come, after all, from allowing several threads to read the same location in memory at the same time.

Windows lacks a reader/writer lock implementation, but the .NET Framework class library provides one in the class named ReaderWriterLock. To use it, set up one reader/writer lock for each resource that you want to guard. Have reader threads call AcquireReaderLock before accessing the resource and ReleaseReaderLock after the access is complete. Have writer threads call AcquireWriterLock before accessing the resource and ReleaseWriterLock afterward. AcquireReaderLock blocks if the lock is currently owned by a writer thread but not if it’s owned by other reader threads. AcquireWriterLock blocks if the lock is owned by anyone. Consequently, multiple threads can read the resource concurrently, but only one thread at a time can write to it and it can’t write if another thread is reading.

That’s ReaderWriterLock in a nutshell. The application in Figure 14-12 demonstrates how these concepts translate to real-world code. It’s the same basic application used in the monitor samples, but this time the buffer is protected by a reader/writer lock instead of a monitor. A reader/writer lock makes sense when reader threads outnumber writer threads 10 to 1 as they do in this sample.

Observe that calls to ReleaseReaderLock and ReleaseWriterLock are enclosed in finally blocks to be absolutely certain that they’re executed. Also, the Timeout.Infinite passed to the Acquire methods indicates that the calling thread is willing to wait for the lock indefinitely. If you prefer, you can pass in a time-out value expressed in milliseconds or as a TimeSpan value. Neither AcquireReaderLock nor AcquireWriterLock returns a value indicating whether the lock was acquired; according to the .NET Framework documentation, a call whose time-out expires before the lock is granted throws an ApplicationException instead. To ask whether the calling thread currently holds a lock, read the ReaderWriterLock’s IsReaderLockHeld or IsWriterLockHeld property. The former returns true if the calling thread holds a reader lock and false if it does not. The latter does the same for writer locks.
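As a hedged illustration of acquiring a reader lock with a finite time-out, consider the sketch below. It relies on the documented behavior that AcquireReaderLock throws an ApplicationException when the time-out expires before the lock is granted. The names RwTimeoutDemo and TryRead are hypothetical, and the two ManualResetEvent fields exist only so that the demo can keep a writer lock held while the first read is attempted.

```csharp
using System;
using System.Threading;

class RwTimeoutDemo
{
    static ReaderWriterLock rwlock = new ReaderWriterLock ();
    static ManualResetEvent writerHasLock = new ManualResetEvent (false);
    static ManualResetEvent letWriterFinish = new ManualResetEvent (false);

    // Holds the writer lock until told to let go
    static void WriterFunc ()
    {
        rwlock.AcquireWriterLock (Timeout.Infinite);
        try {
            writerHasLock.Set ();
            letWriterFinish.WaitOne ();
        }
        finally {
            rwlock.ReleaseWriterLock ();
        }
    }

    // Tries to take a reader lock, waiting up to the specified number
    // of milliseconds; returns false if the time-out expired
    public static bool TryRead (int milliseconds)
    {
        try {
            rwlock.AcquireReaderLock (milliseconds);
        }
        catch (ApplicationException) {
            return false;   // Timed out; no lock is held
        }

        try {
            // TODO: Read from the resource guarded by the lock
            return rwlock.IsReaderLockHeld;
        }
        finally {
            rwlock.ReleaseReaderLock ();
        }
    }

    static void Main ()
    {
        Thread writer = new Thread (new ThreadStart (WriterFunc));
        writer.Start ();
        writerHasLock.WaitOne ();   // The writer now owns the lock

        Console.WriteLine ("While the writer holds the lock: {0}", TryRead (200));

        letWriterFinish.Set ();
        writer.Join ();

        Console.WriteLine ("After the writer releases it: {0}", TryRead (200));
    }
}
```

The first call times out and prints False; the second acquires the lock and prints True. Keeping the Acquire call outside the try block that owns the finally clause ensures that ReleaseReaderLock is called only when a lock was really acquired.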

using System;
using System.Threading;

class MyApp
{
    static Random rng = new Random ();
    static byte[] buffer = new byte[100];
    static Thread writer;
    static ReaderWriterLock rwlock = new ReaderWriterLock ();

    static void Main ()
    {
        // Initialize the buffer
        for (int i=0; i<100; i++)
            buffer[i] = (byte) (i + 1);

        // Start one writer thread
        writer = new Thread (new ThreadStart (WriterFunc));
        writer.Start ();

        // Start 10 reader threads
        Thread[] readers = new Thread[10];

        for (int i=0; i<10; i++) {
            readers[i] = new Thread (new ThreadStart (ReaderFunc));
            readers[i].Name = (i + 1).ToString ();
            readers[i].Start ();
        }
    }

    static void ReaderFunc ()
    {
        // Loop until the writer thread ends
        for (int i=0; writer.IsAlive; i++) {
            int sum = 0;

            // Sum the values in the buffer
            rwlock.AcquireReaderLock (Timeout.Infinite);
            try {
                for (int k=0; k<100; k++)
                    sum += buffer[k];
            }
            finally {
                rwlock.ReleaseReaderLock ();
            }

            // Report an error if the sum is incorrect
            if (sum != 5050) {
                string message = String.Format ("Thread {0} " +
                    "reports a corrupted read on iteration {1}",
                    Thread.CurrentThread.Name, i + 1);
                Console.WriteLine (message);
                writer.Abort ();
                return;
            }
        }
    }

    static void WriterFunc ()
    {
        DateTime start = DateTime.Now;

        // Loop for up to 10 seconds
        while ((DateTime.Now - start).Seconds < 10) {
            int j = rng.Next (0, 100);
            int k = rng.Next (0, 100);

            rwlock.AcquireWriterLock (Timeout.Infinite);
            try {
                Swap (ref buffer[j], ref buffer[k]);
            }
            finally {
                rwlock.ReleaseWriterLock ();
            }
        }
    }

    static void Swap (ref byte a, ref byte b)
    {
        byte tmp = a;
        a = b;
        b = tmp;
    }
}

Figure 14-12

A potential gotcha to watch out for regarding ReaderWriterLock has to do with threads that need writer locks while they hold reader locks. In the following example, a thread first acquires a reader lock and then later decides to grab a writer lock, too:

rwlock.AcquireReaderLock (Timeout.Infinite);
try {
    // TODO: Read from the resource guarded by the lock
      .
      .
      .
    // Oops! Need to do some writing, too
    rwlock.AcquireWriterLock (Timeout.Infinite);
    try {
        // TODO: Write to the resource guarded by the lock
          .
          .
          .
    }
    finally {
        rwlock.ReleaseWriterLock ();
    }
}
finally {
    rwlock.ReleaseReaderLock ();
}

The result? Deadlock. ReaderWriterLock supports nested calls, which means it’s perfectly safe for the same thread to request a read lock or write lock as many times as it wants. That’s essential for threads that call methods recursively. But if a thread holding a read lock requests a write lock, it locks all right: it locks forever. In this example, the call to AcquireWriterLock disappears into the framework and never returns.

The solution is a pair of ReaderWriterLock methods named UpgradeToWriterLock and DowngradeFromWriterLock, which allow a thread that holds a reader lock to temporarily convert it to a writer lock. Here’s the proper way to nest reader and writer locks:

rwlock.AcquireReaderLock (Timeout.Infinite);
try {
    // TODO: Read from the resource guarded by the lock
      .
      .
      .
    LockCookie cookie = rwlock.UpgradeToWriterLock (Timeout.Infinite);
    try {
        // TODO: Write to the resource guarded by the lock
          .
          .
          .
    }
    finally {
        rwlock.DowngradeFromWriterLock (ref cookie);
    }
}
finally {
    rwlock.ReleaseReaderLock ();
}

Now the code will work as intended, and it won’t bother end users with pesky hangs.

There is some debate in the developer community about how efficient ReaderWriterLock is and whether it’s vulnerable to locking out some threads entirely in extreme situations. Traditional reader/writer locks give writers precedence over readers and never deny a writer thread access, even if reader threads have been blocking longer. ReaderWriterLock, however, attempts to divide time more equitably between reader and writer threads. If one writer thread and several reader threads are waiting for the lock to come free, ReaderWriterLock may let one or more readers run before the writer. The jury is still out on whether this design is good or bad. Microsoft is actively investigating the implications and might change ReaderWriterLock’s behavior in future versions of the .NET Framework. Stay tuned for late-breaking news.

The word “mutex” is a contraction of the words “mutually exclusive.” A mutex is a synchronization object that guards a resource and prevents it from being accessed by more than one thread at a time. It’s similar to a monitor in that regard. Two fundamental differences, however, distinguish monitors and mutexes:

- Mutexes have the power to synchronize threads belonging to different applications and processes; monitors do not.
- If a thread acquires a mutex and terminates without freeing it, the system deems the mutex to be abandoned and automatically frees it. Monitors are not afforded the same protection.

The FCL’s System.Threading.Mutex class represents mutexes. The following statement creates a Mutex instance:

Mutex mutex = new Mutex ();

These statements acquire the mutex prior to accessing the resource that it guards and release it once the access is complete:

mutex.WaitOne ();
try {
  .
  .
  .
}
finally {
    mutex.ReleaseMutex ();
}

As usual, the finally block ensures that the mutex is released even if an exception occurs while the mutex is held.

You could easily demonstrate mutexes at work by rewiring Monitor.cs or ReaderWriterLock.cs to use a mutex instead of a monitor or reader/writer lock. But to do so would be to misrepresent the purpose of mutexes. When used to synchronize threads in the same application, mutexes are orders of magnitude slower than monitors. The real power of mutexes lies in reaching across application boundaries. Suppose two different applications communicate with each other using shared memory. They can use a mutex to synchronize accesses to the memory that they share, even though the threads performing the accesses belong to different applications and probably to different processes as well. The secret is to have each application create a named mutex and for each to use the same name:

// Application A
Mutex mutex = new Mutex (false, "StevieRayVaughanRocks");

// Application B
Mutex mutex = new Mutex (false, "StevieRayVaughanRocks");

Even though two different Mutex objects are created in two different memory spaces, both refer to a common mutex object inside the operating system kernel. Therefore, if a thread in application A calls WaitOne on its mutex and the mutex kernel object is owned by a thread in application B, A’s thread will block until the mutex comes free. You can’t do that with a monitor, nor can you do it with a ReaderWriterLock.

Since Mutex wraps an unmanaged resource—a mutex kernel object—it also provides a Close method for closing the underlying handle representing the object. If you create and destroy Mutex objects on the fly, be sure to call Close on them the moment you’re done with them to prevent objects from piling up unabated in the operating system kernel.
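The sketch below puts these pieces together in a single process so that it can be run without a second application. It is an illustration under stated assumptions, not a recipe: the mutex name "NamedMutexDemo.Example" and the names NamedMutexDemo, OtherFunc, and Run are all hypothetical, and the second thread merely plays the part of application B by opening the same mutex by name. It has to be a separate thread because a mutex can be reacquired by the thread that already owns it.

```csharp
using System;
using System.Threading;

class NamedMutexDemo
{
    const string Name = "NamedMutexDemo.Example";   // Hypothetical mutex name
    static bool otherGotIt;

    // Stands in for "application B": opens the same kernel object by name
    static void OtherFunc ()
    {
        Mutex mutex = new Mutex (false, Name);
        try {
            // Probe without blocking; false means someone else owns it
            otherGotIt = mutex.WaitOne (0, false);
            if (otherGotIt)
                mutex.ReleaseMutex ();
        }
        finally {
            mutex.Close ();   // Release the underlying handle
        }
    }

    // Returns true if the second thread managed to acquire the mutex
    // while the calling thread owned it
    public static bool Run ()
    {
        bool createdNew;
        Mutex mutex = new Mutex (false, Name, out createdNew);
        try {
            mutex.WaitOne ();
            try {
                Thread other = new Thread (new ThreadStart (OtherFunc));
                other.Start ();
                other.Join ();
            }
            finally {
                mutex.ReleaseMutex ();
            }
        }
        finally {
            mutex.Close ();
        }
        return otherGotIt;
    }

    static void Main ()
    {
        Console.WriteLine ("Other thread acquired the mutex: {0}", Run ());
    }
}
```

The program prints False, because the second Mutex object refers to the same kernel object that the first thread owns. The createdNew flag returned by the three-parameter constructor tells you whether the call created the kernel object or attached to one that already existed.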

When I speak about events at conferences and in classes, I like to describe them as “software triggers” or “thread triggers.” Whereas monitors, mutexes, and reader/writer locks are used to guard access to resources, events are used to coordinate the actions of multiple threads in a more general way, ensuring that each thread does its thing in the proper sequence with regard to the other threads. As a simple example, suppose that thread A fills a buffer with data that it gathers and thread B is charged with the task of reading data from the buffer and doing something with it. Thread A doesn’t want thread B to begin reading until the buffer is prepared, so it sets an event object when the buffer is ready. Thread B, meanwhile, blocks on the event waiting for it to become set. Until A sets the event, B blocks in an efficient wait state. The moment A sets the event, B comes out of its blocked state and begins reading from the buffer.

Windows supports two different types of events: auto-reset events and manual-reset events. The .NET Framework class library wraps these operating system kernel objects with classes named AutoResetEvent and ManualResetEvent. Both classes feature methods named Set, Reset, and WaitOne for setting an event, resetting an event, and blocking until an event becomes set, respectively. If called on an event that is currently reset, WaitOne blocks until another thread sets the event. If called on an event that is already set, WaitOne returns immediately. An event that is set is sometimes said to be signaled. The reset state is also called the nonsignaled state.
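The buffer scenario described earlier can be sketched in a few lines. This is an illustration under my own assumptions rather than a listing from this chapter: the class name BufferHandoff, the eight-element buffer, and the Run helper are all hypothetical.

```csharp
using System;
using System.Threading;

class BufferHandoff
{
    static int[] buffer = new int[8];
    static AutoResetEvent ready = new AutoResetEvent (false);  // Initially reset
    static int sum;

    // Thread A: fill the buffer, then set the event
    static void ThreadFuncA ()
    {
        for (int i=0; i<buffer.Length; i++)
            buffer[i] = i + 1;
        ready.Set ();
    }

    // Thread B: block until the buffer is ready, then read it
    static void ThreadFuncB ()
    {
        ready.WaitOne ();
        for (int i=0; i<buffer.Length; i++)
            sum += buffer[i];
    }

    public static int Run ()
    {
        sum = 0;
        Thread b = new Thread (new ThreadStart (ThreadFuncB));
        Thread a = new Thread (new ThreadStart (ThreadFuncA));
        b.Start ();     // B starts first and blocks on the event
        a.Start ();
        a.Join ();
        b.Join ();
        return sum;
    }

    static void Main ()
    {
        Console.WriteLine (Run ());     // 36 (1 + 2 + ... + 8)
        ready.Close ();
    }
}
```

The order in which the threads start doesn’t matter. If A sets the event before B calls WaitOne, the event simply stays set and B’s call returns immediately.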

The difference between AutoResetEvent and ManualResetEvent is what happens when a call to WaitOne is satisfied. The system automatically resets an AutoResetEvent as it releases a waiting thread, hence the name. The system doesn’t automatically reset a ManualResetEvent; a thread must reset it manually by calling the event’s Reset method. This seemingly minor behavioral difference has far-reaching implications for your code. AutoResetEvents are generally used when just one thread calls WaitOne. Since an AutoResetEvent is reset before the call to WaitOne returns, each call to Set releases at most one waiting thread. One call to Set on a ManualResetEvent, by contrast, is sufficient to signal any number of threads that call WaitOne, and the event stays set until Reset is called. For this reason, ManualResetEvent is typically used to trigger multiple threads.
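A quick way to see the difference is to poll each kind of event with a zero time-out, which reports the event’s state without blocking. The following single-threaded sketch does just that; the class name ResetBehavior and the Probe helper are hypothetical names chosen for illustration.

```csharp
using System;
using System.Threading;

class ResetBehavior
{
    public static string Probe ()
    {
        // Both events start out set (signaled)
        AutoResetEvent auto = new AutoResetEvent (true);
        ManualResetEvent manual = new ManualResetEvent (true);

        // WaitOne with a zero time-out returns true if the event is set
        bool a1 = auto.WaitOne (0, false);      // true, and the event resets itself
        bool a2 = auto.WaitOne (0, false);      // false: already reset

        bool m1 = manual.WaitOne (0, false);    // true
        bool m2 = manual.WaitOne (0, false);    // true: still set
        manual.Reset ();
        bool m3 = manual.WaitOne (0, false);    // false: reset by hand

        auto.Close ();
        manual.Close ();
        return a1 + " " + a2 + " " + m1 + " " + m2 + " " + m3;
    }

    static void Main ()
    {
        Console.WriteLine (Probe ());   // True False True True False
    }
}
```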

To serve as an example of events at work, the console application in Figure 14-13 uses two threads to write alternating even and odd numbers to the console, beginning with 1 and ending with 100. One thread writes even numbers; the other writes odd numbers. Left unsynchronized, the threads would blow their output to the console window as quickly as possible and produce a random mix of even and odd numbers (or, more than likely, a long series of evens followed by a long series of odds, or vice versa, because one thread might run out its lifetime before the other gets a chance to execute). With a little help from a pair of AutoResetEvents, however, the threads can be coerced into working together.

using System;

using System.Threading;

class MyApp

{

    static AutoResetEvent are1 = new AutoResetEvent (false);

    static AutoResetEvent are2 = new AutoResetEvent (false);

    static void Main ()

    {

        try {

            // Create two threads

            Thread thread1 =

                new Thread (new ThreadStart (ThreadFuncOdd));

            Thread thread2 =

                new Thread (new ThreadStart (ThreadFuncEven));

            // Start the threads

            thread1.Start ();

            thread2.Start ();

            // Wait for the threads to end

            thread1.Join ();

            thread2.Join ();

        }

        finally {

            // Close the events

            are1.Close ();

            are2.Close ();

        }

    }

    static void ThreadFuncOdd ()

    {

        for (int i=1; i<=99; i+=2) {

            Console.WriteLine (i);  // Output the next odd number

            are1.Set ();            // Release the other thread

            are2.WaitOne ();        // Wait for the other thread

        }

    }

    static void ThreadFuncEven ()

    {

        for (int i=2; i<=100; i+=2) {

            are1.WaitOne ();        // Wait for the other thread

            Console.WriteLine (i);  // Output the next even number

            are2.Set ();            // Release the other thread

        }

    }

}

Here’s how the sample works. At startup, it launches two threads: one that outputs odd numbers and one that outputs even numbers. The “odd” thread writes a 1 to the console window. It then sets an AutoResetEvent object named are1 and blocks on an AutoResetEvent object named are2:

Console.WriteLine (i);

are1.Set ();

are2.WaitOne ();

The “even” thread, meanwhile, blocks on are1 right out of the gate. When WaitOne returns because the odd thread set are1, the even thread outputs a 2, sets are2, and loops back to call WaitOne again on are1:

are1.WaitOne ();

Console.WriteLine (i);

are2.Set ();

When the even thread sets are2, the odd thread comes out of its call to WaitOne, and the whole process repeats. The result is a perfect stream of numbers in the console window.

Though this sample borders on the trivial, it’s entirely representative of how events are typically used in real applications. They’re often used in pairs, and they’re often used to sequence the actions of two or more threads.

Note the finally block in Main that calls Close on the AutoResetEvent objects. Events, like mutexes, wrap Windows kernel objects and should therefore be closed when they’re no longer needed. Technically, the calls to Close are superfluous here because the events are automatically closed when the application ends, but I included them anyway to emphasize the importance of closing event objects.

Occasionally a thread needs to block on multiple synchronization objects. It might, for example, need to block on two or more AutoResetEvents and come alive when any of the events becomes set to perform some action on behalf of the thread that did the setting. Or it could block on several AutoResetEvents and want to remain blocked until all of the events become set. Both goals can be accomplished using WaitHandle methods named WaitAny and WaitAll.

WaitHandle is a System.Threading class that serves as a managed wrapper around Windows synchronization objects. Mutex, AutoResetEvent, and ManualResetEvent all derive from it. When you call WaitOne on an event or a mutex, you’re calling a method inherited from WaitHandle. WaitAny and WaitAll are static methods that enable a thread to block on several (on most platforms, up to 64) mutexes and events at once. They expose the same functionality to managed applications that the Windows API function WaitForMultipleObjects exposes to unmanaged applications. In the following example, the calling thread blocks until one of the three AutoResetEvent objects in the syncobjects array becomes set:

AutoResetEvent are1 = new AutoResetEvent (false);

AutoResetEvent are2 = new AutoResetEvent (false);

AutoResetEvent are3 = new AutoResetEvent (false);

  .

  .

  .

WaitHandle[] syncobjects = new WaitHandle[3] { are1, are2, are3 };

WaitHandle.WaitAny (syncobjects);

Changing WaitAny to WaitAll blocks the calling thread until all of the AutoResetEvents are set:

WaitHandle.WaitAll (syncobjects);

WaitAny and WaitAll also come in versions that accept time-out values. Time-outs can be expressed as integers (milliseconds) or TimeSpan values.
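Here’s a short sketch of the time-out versions in action. WaitAny returns the array index of the object that satisfied the wait, or the constant WaitHandle.WaitTimeout if the time-out expired first; WaitAll returns a bool. The class name WaitWithTimeout, the Run helper, and the 100-millisecond time-outs are assumptions made for illustration.

```csharp
using System;
using System.Threading;

class WaitWithTimeout
{
    public static string Run ()
    {
        AutoResetEvent are1 = new AutoResetEvent (false);
        AutoResetEvent are2 = new AutoResetEvent (false);
        AutoResetEvent are3 = new AutoResetEvent (false);
        WaitHandle[] syncobjects = new WaitHandle[3] { are1, are2, are3 };

        // Only are2 is set, so WaitAny reports index 1 (and are2 auto-resets)
        are2.Set ();
        int index = WaitHandle.WaitAny (syncobjects, 100, false);

        // Nothing is set now, so this call times out (TimeSpan version)
        int again = WaitHandle.WaitAny (syncobjects,
            new TimeSpan (0, 0, 0, 0, 100), false);
        bool timedOut = (again == WaitHandle.WaitTimeout);

        // With all three events set, WaitAll succeeds within the time-out
        are1.Set ();
        are2.Set ();
        are3.Set ();
        bool all = WaitHandle.WaitAll (syncobjects, 100, false);

        are1.Close ();
        are2.Close ();
        are3.Close ();
        return index + " " + timedOut + " " + all;
    }

    static void Main ()
    {
        Console.WriteLine (Run ());     // 1 True True
    }
}
```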

Should you ever need to interrupt a thread while it waits for one or more synchronization objects to become signaled, use the Thread class’s Interrupt method. Interrupt throws a ThreadInterruptedException in the thread it’s called on. It works only on threads that are blocked, that is, waiting, sleeping, or joining another thread; call it on an unblocked thread and it interrupts the thread the next time the thread blocks. A common use for Interrupt is getting the attention of a thread that’s blocking inside a call to WaitAll after the application has determined that one of the synchronization objects will never become signaled.
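The following sketch shows the general shape of the technique: a worker thread blocks on an event that is never set, and the main thread frees it with Interrupt. The names InterruptSketch, never, and Run are hypothetical, and the brief Sleep is there only to give the worker a chance to block before it’s interrupted.

```csharp
using System;
using System.Threading;

class InterruptSketch
{
    // An event that no one ever sets
    static ManualResetEvent never = new ManualResetEvent (false);
    static bool interrupted;

    static void ThreadFunc ()
    {
        try {
            never.WaitOne ();           // Blocks indefinitely
        }
        catch (ThreadInterruptedException) {
            interrupted = true;         // Woken by Interrupt, not by Set
        }
    }

    public static bool Run ()
    {
        interrupted = false;
        Thread thread = new Thread (new ThreadStart (ThreadFunc));
        thread.Start ();

        Thread.Sleep (100);             // Let the worker reach WaitOne
        thread.Interrupt ();            // Throws in the blocked thread
        thread.Join ();
        return interrupted;
    }

    static void Main ()
    {
        Console.WriteLine (Run ());     // True
        never.Close ();
    }
}
```

A thread that expects to be interrupted should wrap its blocking calls in a try block, as ThreadFunc does. Otherwise the exception goes unhandled in that thread.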

The vast majority of the classes in the .NET Framework class library are not thread-safe. If you want to share an ArrayList between a reader thread and a writer thread, for example, it’s important to synchronize access to the ArrayList so that one thread can’t read from it while another thread writes to it.

One way to synchronize access to an ArrayList is to use a monitor, as demonstrated here:

// Create the ArrayList

ArrayList list = new ArrayList ();

  .

  .

  .

// Thread A

lock (list) {

    // Add an item to the ArrayList

    list.Add ("Fender Stratocaster");

}

  .

  .

  .

// Thread B

lock (list) {

    // Read the ArrayList's last item

    string item = (string) list[list.Count - 1];

}

However, there’s an easier way. ArrayList, Hashtable, Queue, Stack, and selected other FCL classes implement a static method named Synchronized that returns a thread-safe wrapper around the object passed to it. Here’s how to serialize reads and writes to an ArrayList using a wrapper:

// Create the ArrayList and a thread-safe wrapper for it

ArrayList list = new ArrayList ();

ArrayList safelist = ArrayList.Synchronized (list);

  .

  .

  .

// Thread A

safelist.Add ("Fender Stratocaster");

  .

  .

  .

// Thread B

string item = (string) safelist[safelist.Count - 1];

Using thread-safe wrappers created with the Synchronized method shifts the burden of synchronization from your code to the framework. It can also improve performance because a well-designed wrapper class can use its knowledge of the underlying class to lock only when necessary and for no longer than required.

Different collection classes implement different threading behaviors. For example, a Hashtable can safely be used by one writer thread and unlimited reader threads right out of the box. Synchronization is only required when there are two or more writer threads. A Queue, on the other hand, doesn’t even support simultaneous reads because the very act of reading from a Queue modifies its contents and internal data structures. Therefore, you should use a synchronized wrapper whenever multiple threads access a Queue, regardless of whether the threads are readers or writers.
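As an illustration of the last point, here’s a minimal sketch in which four threads write to one Queue through a synchronized wrapper. The class name SharedQueue, the thread count, and the item count are arbitrary choices of mine, not figures from this chapter.

```csharp
using System;
using System.Collections;
using System.Threading;

class SharedQueue
{
    // All threads go through the wrapper, never the raw Queue
    static Queue safequeue = Queue.Synchronized (new Queue ());

    static void ThreadFunc ()
    {
        for (int i=0; i<1000; i++)
            safequeue.Enqueue (i);      // Each call is atomic
    }

    public static int Run ()
    {
        safequeue.Clear ();

        Thread[] threads = new Thread[4];
        for (int i=0; i<threads.Length; i++) {
            threads[i] = new Thread (new ThreadStart (ThreadFunc));
            threads[i].Start ();
        }
        for (int i=0; i<threads.Length; i++)
            threads[i].Join ();

        return safequeue.Count;
    }

    static void Main ()
    {
        Console.WriteLine (Run ());     // 4000
    }
}
```

Replace the wrapper with a raw Queue and the final count is no longer guaranteed. Overlapping calls to Enqueue can lose items or throw an exception.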

Thread-safe wrappers returned by Synchronized guarantee the atomicity of individual method calls but offer no such assurances for groups of method calls. In other words, if you create a thread-safe wrapper around an ArrayList, multiple threads can safely access the ArrayList through the wrapper. If, however, a reader thread iterates over an ArrayList while a writer thread writes to it, the ArrayList’s contents could change even as they’re being enumerated.

Treating multiple reads and writes as atomic operations requires external synchronization. Here’s the proper way to externally synchronize ArrayLists and other types that implement ICollection:

// Create the ArrayList

ArrayList list = new ArrayList ();

  .

  .

  .

// Thread A

lock (list.SyncRoot) {

    // Add two items to the ArrayList

    list.Add ("Fender Stratocaster");

    list.Add ("Gibson SG");

}

  .

  .

  .

// Thread B

lock (list.SyncRoot) {

    // Enumerate the ArrayList's items

    foreach (string item in list) {

      ...

    }

}

The argument passed to lock isn’t the ArrayList itself but rather an ArrayList property named SyncRoot. SyncRoot is a member of the ICollection interface. Read from a raw collection class instance, SyncRoot returns this. Read from a thread-safe wrapper class created with Synchronized, SyncRoot returns a reference to the object that the wrapper wraps, which is also the object the wrapper locks internally. That’s important, because locking the wrapper itself would layer a second, unrelated lock on top of the one the wrapper already takes, and adding synchronization to an already synchronized object impedes performance. Synchronizing on SyncRoot makes your code generic and allows it to perform equally well with synchronized and unsynchronized objects.
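The sketch below shows what “generic” means in practice: one helper that locks on SyncRoot and works the same whether it’s handed a raw ArrayList or a synchronized wrapper. The class name SyncRootSketch and the CountItems and Run helpers are hypothetical. The comparison of the two SyncRoot values is expected to yield True on the framework versions I’m aware of, but treat that as an observation about the implementation rather than a documented guarantee.

```csharp
using System;
using System.Collections;

class SyncRootSketch
{
    // Works with any ICollection, synchronized or not
    static int CountItems (ICollection collection)
    {
        int count = 0;
        lock (collection.SyncRoot) {
            // No other thread that honors SyncRoot can modify the
            // collection while it's being enumerated
            foreach (object item in collection)
                count++;
        }
        return count;
    }

    public static string Run ()
    {
        ArrayList list = new ArrayList ();
        ArrayList safelist = ArrayList.Synchronized (list);
        safelist.Add ("Fender Stratocaster");
        safelist.Add ("Gibson SG");

        // Do the wrapper and the raw list share one lock object?
        bool sameRoot =
            Object.ReferenceEquals (list.SyncRoot, safelist.SyncRoot);

        return sameRoot + " " + CountItems (list) + " " +
            CountItems (safelist);
    }

    static void Main ()
    {
        Console.WriteLine (Run ());
    }
}
```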

The .NET Framework offers a simple and easy-to-use means for synchronizing access to entire methods through the MethodImplAttribute class, which belongs to the System.Runtime.CompilerServices namespace. To prevent a method from being executed by more than one thread at a time, decorate it as shown here:

[MethodImpl (MethodImplOptions.Synchronized)]

byte[] TransformData (byte[] buffer)

{

  ...

}

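Now the framework will serialize calls to TransformData. Because TransformData is an instance method, the lock is taken on the object the method is called on, so calls are serialized per object; a static method decorated the same way locks on its type instead. A method synchronized in this manner closely approximates the classic definition of a critical section: a section of code that can’t be executed simultaneously by concurrent threads.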
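To see the attribute earn its keep, consider this minimal sketch, in which four threads hammer on a synchronized static method that performs an unprotected read-modify-write. The class name SynchronizedMethod, the Increment method, and the iteration counts are assumptions made for illustration.

```csharp
using System;
using System.Runtime.CompilerServices;
using System.Threading;

class SynchronizedMethod
{
    static int count;

    // Only one thread at a time can be inside this method
    [MethodImpl (MethodImplOptions.Synchronized)]
    static void Increment ()
    {
        count = count + 1;      // Read-modify-write; unsafe without the lock
    }

    static void ThreadFunc ()
    {
        for (int i=0; i<100000; i++)
            Increment ();
    }

    public static int Run ()
    {
        count = 0;

        Thread[] threads = new Thread[4];
        for (int i=0; i<threads.Length; i++) {
            threads[i] = new Thread (new ThreadStart (ThreadFunc));
            threads[i].Start ();
        }
        for (int i=0; i<threads.Length; i++)
            threads[i].Join ();

        return count;
    }

    static void Main ()
    {
        Console.WriteLine (Run ());     // 400000
    }
}
```

Remove the attribute and the final count will probably fall short of 400,000, because increments performed by different threads can overwrite one another.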